Part 4: Machine construction of the Assessment: Design Level

As we saw in the previous blog posts, an ideal inpatient assessment section of a SOAP note answers (1) who the patient is; (2) why the patient was admitted to the hospital; (3) the relevant recent and long-term clinical history of the patient; and (4) their current clinical status. This installment of the blogpost outlines how advances in artificial intelligence (AI), particularly in natural language understanding and generation, may provide a mechanism to answer each of the four tasks identified in an automated manner. We also briefly consider some of the associated challenges and errors.

Until recently, auto-generating a well-written patient assessment with computers was an altogether impossible task. The activity involves understanding structured and unstructured data related to a patient and then composing their contents into a grammatically correct, succinct, clinically nuanced and fluent paragraph. The machine also has to make accurate judgments on what matters, since a patient’s history and context may be voluminous. Early advances in neural natural language processing that solved tasks such as text classification (Kim, Y., 2014), named entity recognition (Chiu, J. P. & Nichols, E., 2016), and sentiment analysis (dos Santos, C. & Gatti, M., 2014) fell short on tasks that involved fluid sentence, paragraph, or longform text composition. Although neural networks have formed an integral component in the technology stack beneath systems such as Google Translate, the technology only began to approach human level fluency with the advent of a particular neural network architecture named transformers (Vaswani et al., 2017). If you are interested in learning more, Formal Algorithms for Transformers (Phuong, M. & Hutter, M., 2022) provides a concise but technical introduction to transformers; for a friendlier introduction see The Illustrated Transformer (Alammar, J., 2018).

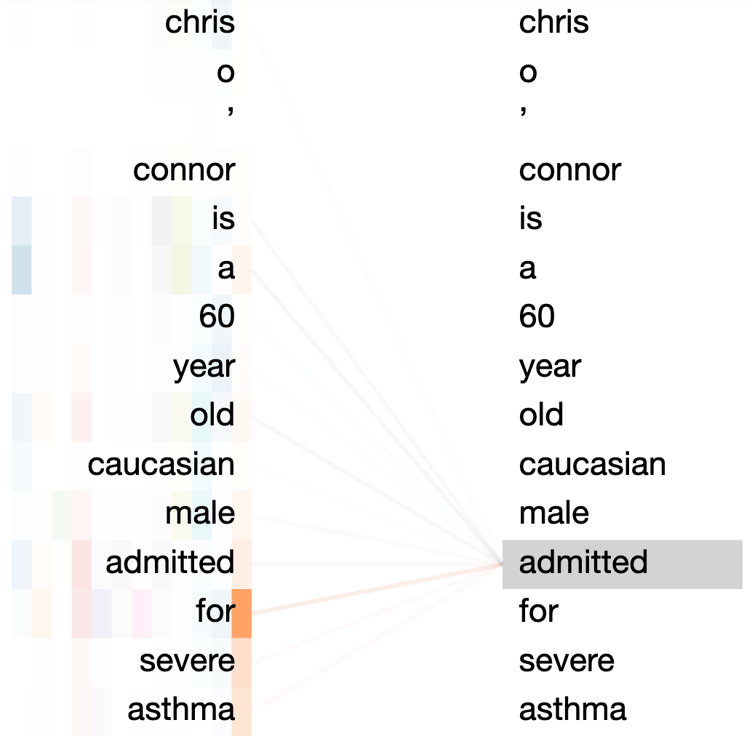

Transformers are powerful because they connect all possible combinations of neural nodes and employ a mechanism called self-attention to prioritize the relevant connections. Given the sentence ‘Chris O’Connor is a 60 year old caucasian male admitted for severe asthma’, earlier sequential neural network architectures used in Natural Language Processing (NLP) could, practically, interpret the word ‘admitted’ only using a limited number of words on either side. A transformer, by contrast, will be able to effortlessly use the entire sentence as context, as shown in Figure 2. In fact, standard transformer architectures can exploit context lengths from 512 words to 1024 words or more. The ability to exploit such long range dependencies makes transformers very good at language modeling, the task of predicting the next or missing span of text in a sequence given a context.

Figure 2: With respect to the word ‘admitted’, BERT assigns highest attention weights to the words ‘for’, ‘severe’, and ‘asthma’ (line weights correspond to assigned attention weights).

In the past five years, a number of large transformer language models (LLMs) such as BERT (Delvin et al., 2018), GPT (Radford et al., 2018), T5 (Raffel et al., 2020), BART (Lewis et al., 2020) and more recently BLOOM (Le Scao et al., 2022) trained on massive text corpora have been successfully adapted for downstream natural language understanding and generation tasks such as translation, summarization, and question answering. LLMs encode both linguistic and relational knowledge and are able to generate long text sequences that are grammatically correct and logically consistent. At Pieces, we believe that leveraging LLMs with adequate corrections for their weaknesses offers a path towards automatically generating accurate and fluent patient assessment notes.

It is useful to consider the patient assessment generation task in terms of its inputs and outputs. As input we have structured, semi-structured and unstructured data. A patient’s electronic medical record (EMR) will contain fixed or structured fields such as a name, age, and address. On top of that, we also have access to critical information relating to the patient in freeform, natural language, or unstructured text. Unstructured patient data include, for example, physician progress notes, nurse observations, narrative case management entries, and operating notes while semi-structured data refers to records such as tabular test results supplemented by laboratory technician’s comments or a radiologist’s diagnosis. Pieces Predict, our proprietary AI engine, adds to this mix of patient data various estimations of risk, classification and clinical prioritization. Our task is to utilize all of this data and produce a patient assessment note as output, as described in Figure 3. Assessment generation is, thus, a complex, multi-document summarization task.

-1.png?width=711&height=400&name=Copy%20of%20Copy%20of%20PIECES_Demo%20Day%20Slide%20Deck%20Draft%20(4)-1.png)

Figure 3: Architecture for LLM-based generation of patient assessment

The dominant paradigm for tackling text summarization today involves fine-tuning an LLM on a related dataset. As a concrete example, engineers tasked with building an automatic news summarizer will, for instance, fine-tune BART on the CNN/DailyMail dataset (Hermann et al., 2015) which has 300,000 news articles from CNN and Daily Mail alongside their respective few-line summaries. The fine-tuned LLM can be thought of as a mathematical function that maps between a news article and its corresponding abstract. Since this function now encodes both linguistic as well as relational knowledge, it can return summaries for previously unseen news articles as well.

In the case of generating patient assessment summaries, however, utilizing LLMs is not as straightforward. Since LLMs are typically pre-trained on text corpora acquired through crawling the web, they are likely to lack clinical medicine knowledge out-of-the-box, requiring additional domain specific pre-training (Gururangan et al., 2020; Lewis et al., 2020). Moreover, as Emily Alsentzer points out, there is a high prevalence of abbreviations in clinical text and clinicians do not often write in complete sentences. Clinical text contains large volumes of duplicated data resulting from overworked clinicians simply copying between notes. Further, standard medical notes tend to vary significantly across hospitals and clinicians templates, leading to potential problems of data divergence. Semi-structured text such as tabulated data or lists can present their own set of challenges while privacy related concerns might mean that critical data has to be anonymized or truncated before feeding to a model.

The foregoing discussion illustrates common challenges with respect to applying LLMs for any clinical natural language processing task. When it comes to generating patient assessment notes, there is also the fundamental issue of creating adequate datasets containing instances of the correct input and output pairs that can provide task specific fine tuning supervision. The third constituent element of an assessment, namely the relevant clinical history to be surfaced at a given stage of a patient’s progression, is particularly tricky. At the basic level, a patient’s electronic medical chart can be long, sometimes containing thousands of pages of numbers and text structured in a time-series, and even transformers that handle input sequence lengths of 2048 words may not be able to process these histories. If one has to summarize a 3000-word news report with a transformer that can handle a sequence length of 2048 words, simply attending to the first 2048 words and ignoring the remaining ones would be a perfectly valid approach given that news articles typically display a lead bias: the initial paragraphs contain the most salient information because of their inverted pyramid writing structure. However, LLMs would be difficult to employ in the same manner on even a single hospital encounter with hundreds and very often thousands of pages of semi-structured and purely narrative text. This suggests the need for a substantial clinically oriented pre-processing scheme.

The final challenge of employing LLMs for assessment generation is that they introduce their own set of errors. Auto generated assessments for patients with rare or novel medical conditions are likely to contain errors since an LLMs’ output is constrained by its training. Hallucinating or forging of new entities and concepts not present in the source document in the summary is also a well observed phenomenon in text summarization using LLMs (Maynez et al., 2020). While the consequences of such errors may be limited in other domains, the demands of clinical medicine insist on a zero tolerance for summarization errors of any type. There are also serious ethical considerations. As Diane Korngiebel and Sean Mooney highlight in their Nature Medicine article, LLMs are prone to reproducing, or even amplifying, societal biases and prejudices and their outputs, therefore, need to be pruned for harmful and inappropriate language (Korngiebel, D. M. and Mooney, S. D., 2021). At Pieces we are therefore focused on robust error detection frameworks, both human and non-human, and supplement the automatic summarizer with comprehensive post-editing processes necessary to screen and correct machine generated assessment notes.

LLMs, situated within a persistent and intelligent controlling infrastructure, provides a feasible path for the automatic generation of fluent and accurate patient assessment notes. LLMs are unlikely to work out-of-the-box, due to both domain and task specific challenges. In our view, numerous additional systems are required to make this work. In the next installment of the blogpost, we will introduce Pieces’ framework for evaluating machine produced patient assessment notes.

Artificial Intelligence and the Restoration of the Medical SOAP Note:

- Part 1: Ode to the Soap note

- Part 2: Assessment, Deconstructed

- Part 3: Icing on the "Assessment": Customization and Elaboration

Thank you to Elijah Hoole , NLP Technical Specialist, for contributing to Part 4 of this series.

Ruben Amarasingham, MD, is the founder and CEO of Pieces, Inc. He is a national expert in the design of AI products for healthcare and public health, and the use of innovative care models to reduce disparities, improve quality, and lower costs. Follow him on LinkedIn or Pieces to catch the next installation of this series.

1 Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification. https://arxiv.org/pdf/1408.5882.pdf

2 Chiu, J. P., & Nichols, E. (2016). Named entity recognition with bidirectional LSTM-CNNs. Transactions of the association for computational linguistics, 4, 357-370

3 Cícero dos Santos and Maíra Gatti. 2014. Deep Convolutional Neural Networks for Sentiment Analysis of Short Texts. In Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, pages 69–78, Dublin, Ireland. Dublin City University and Association for Computational Linguistics.

4 Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

5 Phuong, M., & Hutter, M. (2022). Formal Algorithms for Transformers. arXiv preprint arXiv:2207.09238.

6 Alammar, J. (2018). The Illustrated Transformer. https://jalammar.github.io/illustrated-transformer/.

7 Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

8 Radford, A., Narasimhan, K., Salimans, T., & Sutskever, I. (2018). Improving language understanding by generative pre-training.

9 Raffel, C., Shazeer, N., Roberts, A., Lee, K., Narang, S., Matena, M., ... & Liu, P. J. (2020). Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res., 21(140), 1-67

10 Lewis, M., Liu, Y., Goyal, N., Ghazvininejad, M., Mohamed, A., Levy, O., ... & Zettlemoyer, L. (2020, July). BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (pp. 7871-7880).

11 Scao, T. L., Fan, A., Akiki, C., Pavlick, E., Ilić, S., Hesslow, D., ... & Manica, M. (2022). BLOOM: A 176B-Parameter Open-Access Multilingual Language Model. arXiv preprint arXiv:2211.05100.

12 Hermann, K. M., Kocisky, T., Grefenstette, E., Espeholt, L., Kay, W., Suleyman, M., & Blunsom, P. (2015). Teaching machines to read and comprehend. Advances in neural information processing systems, 28.

13 Gururangan, S., Marasović, A., Swayamdipta, S., Lo, K., Beltagy, I., Downey, D., & Smith, N. A. (2020). Don't stop pretraining: adapt language models to domains and tasks. arXiv preprint arXiv:2004.10964.

14 Lewis, Patrick, et al. "Pretrained language models for biomedical and clinical tasks: Understanding and extending the state-of-the-art." Proceedings of the 3rd Clinical Natural Language Processing Workshop. 2020.

15 PurdueOWL. 2019. Journalism and journalistic writing: The inverted pyramid structure. Accessed: 2022-11-10.

16 Maynez, J., Narayan, S., Bohnet, B., and McDonald, R. (2020). On faithfulness and factuality in abstractive summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 1906–1919, Online. Association for Computational Linguistics.

17 Korngiebel, D.M., Mooney, S.D. Considering the possibilities and pitfalls of Generative Pre-trained Transformer 3 (GPT-3) in healthcare delivery. npj Digit. Med. 4, 93 (2021). https://doi.org/10.1038/s41746-021-00464-x